What is the Future of Computational Storage?

It’s been just over five years since the world was formally introduced to “computational storage.” The term existed for some time before that, appearing in 2012 and even earlier, but 2018 was when it got a formal start and backing in the market. At the 2018 Flash Memory Summit (FMS), three small startups banded together, assembled a room of industry veterans, and decided to launch a standardization effort with SNIA. [1]

We open with this bit of history to point out that, while people today are already wondering if the term will survive, the fact is, in this industry, nothing starts overnight. It takes time, nurturing, support, common frameworks, and more. This is true for many now-known solutions in the data storage market. Just think back to NVM Express (NVMe) vs SCSI over PCIe (SOP). That was not so long ago.

Market changes affecting computational storage

With that preface, let’s discuss the question of the day: “Does computational storage have a future?” It seems like a silly thing to discuss here but, with so much change in the market today, it has become a real concern for some industry professionals, both those who want to see it move forward and those who oppose it. Why the concern, you ask? Well, other innovative solutions are coming to bear, including Compute Express Link (CXL) and computational memory, or compute in memory, which comes with a slew of acronyms (CIM, PIM, AIM, and more).

1. The market is diverse enough to include multiple solutions

What some fail to realize is that these technologies are not competing with one another; rather, each is finding a position in which to help solve the bigger challenge. While we are fast approaching the end of Moore’s Law for semiconductors, we have already reached the end of the von Neumann architecture as well. Where once we had “CPU + Memory + Storage,” we now have compute in many, many places.

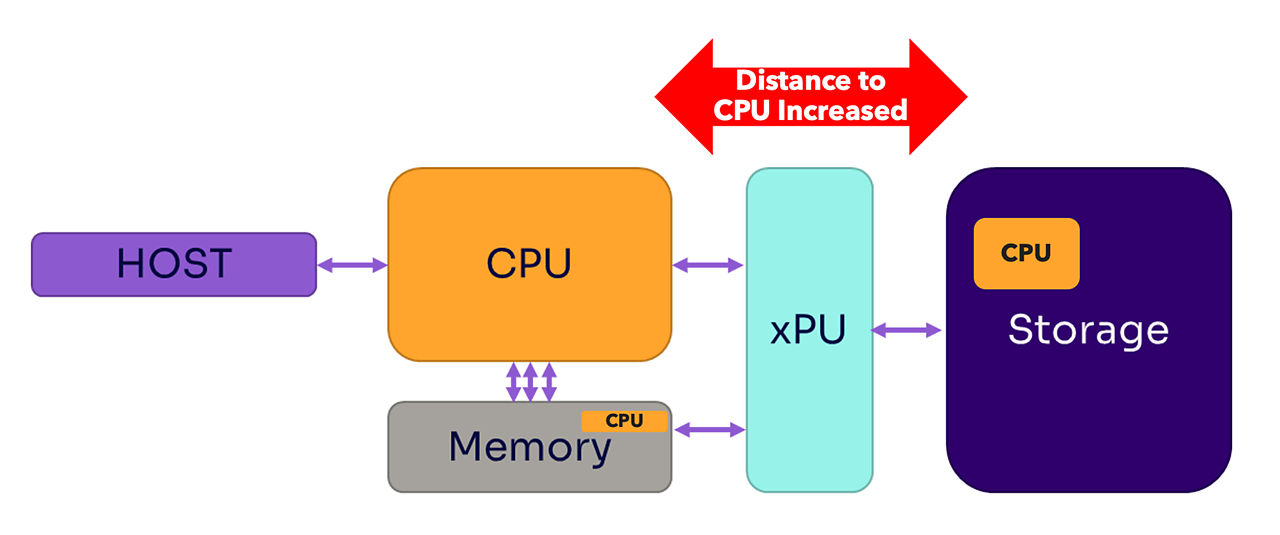

Figure 1. Compute finding its place in data processing

Just look at a snapshot in time to see how NVIDIA was in the right place at the right time with a product they didn’t even realize was going to be such a staple in modern compute: the essential GPU. This example highlights the continued opportunity for solutions like computational storage and even computational ‘anything’.

When GPU-attached systems first appeared, no one saw them as a threat to the compute layer. After all, they were for graphics, right? Well, it seems that when a technology’s name directly echoes that of its partners (“computational” vs. “compute”), some will see it as a replacement effort rather than the supporting, augmenting solution it actually is.

Computational storage now has a ratified architectural design from SNIA and a complete API to support that work, and the NVM Express working group is about to finalize the first formal command set on a protocol to enable it. That, in its own right, is a huge accomplishment in a five-year window. As a reference point, NVMe took longer to get in place. So, the simple answer to the question at hand is: yes, computational storage 1) has a home, 2) will have a home, 3) is not a solution without a problem, and 4) will create a better ecosystem as the market and technologies move forward.

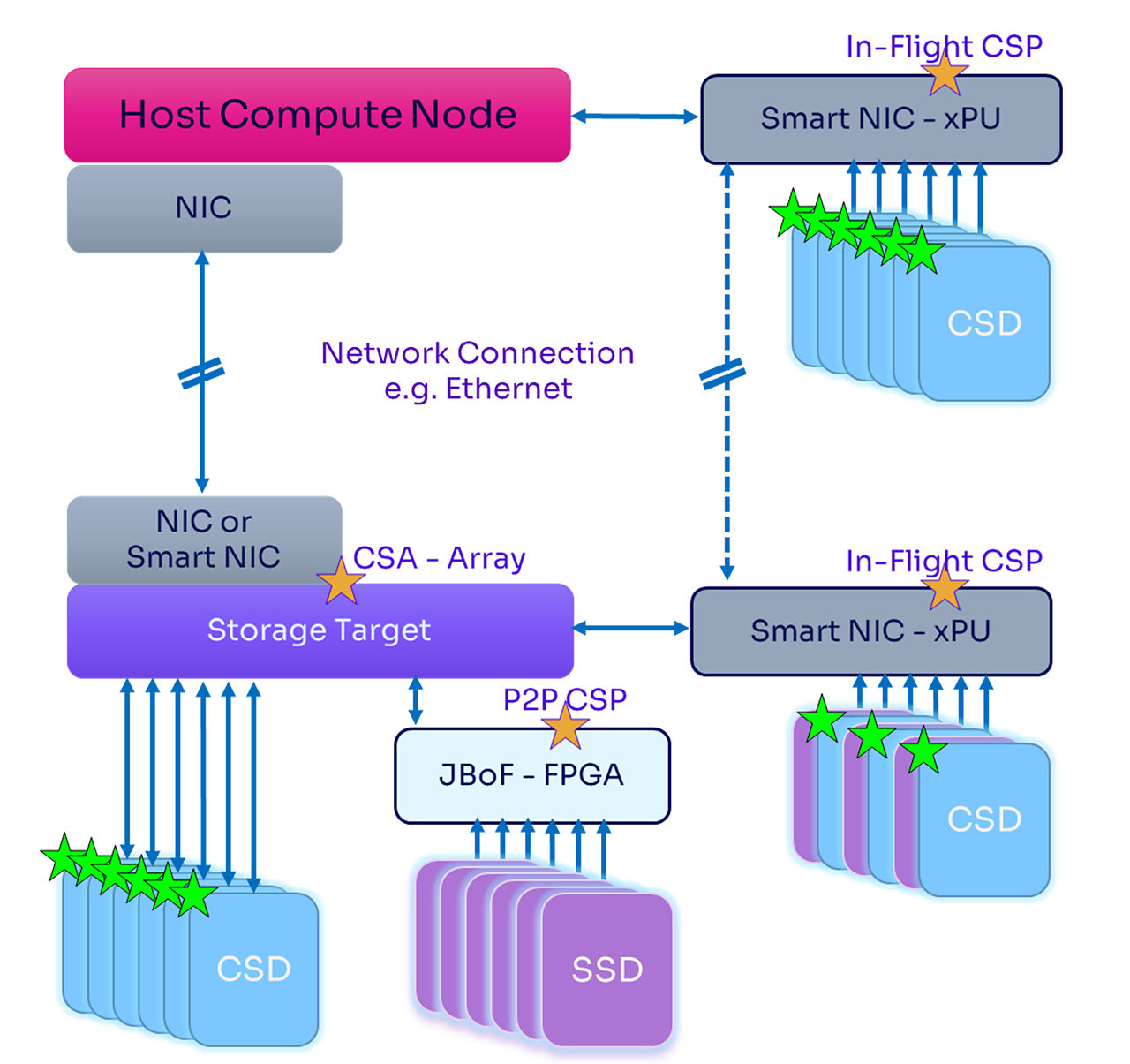

Figure 2. Pathways for computational storage solutions

Computational storage has certainly had its bumps along the way—as well as starts and stops (due to a once-in-a-lifetime pandemic)—but it will exist, it is needed, and it will start growing very soon. While we would have loved to see this technology take off in 2020, there is no doubt that adoption will increase markedly before the end of the decade.

2. The computational storage platform

Computational storage is a platform that lets users manage, analyze, and modify data locally on the storage device, which has grown from 128GB to 128TB in size over the past 15 years [2]. This is a perfect solution for Data Gravity: the amount of energy and time required to move a bit of data from point A to point B. It also shows that we are not removing, replacing, or materially changing the existing architecture; we are simply creating a value-added layer of compute where it is most needed: next to the data.
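
To put a rough number on Data Gravity, here is a minimal back-of-the-envelope sketch in Python. The 128TB capacity comes from the text above; the 7 GB/s sustained link throughput is purely an assumption chosen for illustration, not a figure for any particular drive or interface, and the function name is hypothetical.

```python
def transfer_seconds(num_bytes: float, bytes_per_second: float) -> float:
    """Time needed to move num_bytes over a link with the given throughput."""
    if bytes_per_second <= 0:
        raise ValueError("throughput must be positive")
    return num_bytes / bytes_per_second


DRIVE_BYTES = 128e12  # 128 TB, the capacity figure quoted in the text
ASSUMED_LINK_BYTES_PER_SECOND = 7e9  # assumption: 7 GB/s sustained, illustrative only

seconds = transfer_seconds(DRIVE_BYTES, ASSUMED_LINK_BYTES_PER_SECOND)
hours = seconds / 3600
print(f"Moving the full drive off-device takes about {hours:.1f} hours")
```

Under these assumptions, simply reading out a full drive is a matter of hours before any analysis begins, which is the cost that compute placed next to the data is meant to avoid.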

3. Transformative storage for data

Now, some might say “Well it had to be put there, so why not do the compute before you store it?” The simple answer is: “Sure. Why not?” If you can work on the data before you store it, great. But at some point, that data you have now stored will be needed in a new form, so why not transform it where it already sits? Let’s take a look at an example to explore this notion further.

Think of a book, where data is ‘created’ and ‘changed’ in a real-life mathematical solution and then recorded for history with letters on a page. Those letters represent the data you are storing today. Sometime down the road, if someone reads about that math problem, works on it, and finds a proof or a rebuttal for it, we must now ‘revise’ the data in the book by creating a whole new book: data in, data out, modify, data back in. With today’s more sophisticated means of storage, we can remove several steps: put the data into a Computational Storage Drive (CSD), modify it in place, and store the result. Process simplified.
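
The difference between the two workflows can be sketched with a toy model in Python. Everything here is hypothetical: `ToyDrive`, `run_in_place`, and `revise` are illustrative names, not part of the SNIA API or any NVMe command set, and the model ignores the small cost of sending commands and results. It only counts how many payload bytes cross the host link in each case.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyDrive:
    """Hypothetical stand-in for a drive; counts payload bytes crossing the host link."""
    data: bytes
    bytes_over_link: int = 0

    def read(self) -> bytes:
        # Host-side path: the whole payload travels to the host.
        self.bytes_over_link += len(self.data)
        return self.data

    def write(self, payload: bytes) -> None:
        # Host-side path: the modified payload travels back to the drive.
        self.bytes_over_link += len(payload)
        self.data = payload

    def run_in_place(self, transform: Callable[[bytes], bytes]) -> None:
        # Computational-storage path: the transform runs next to the data,
        # so in this simplified model no payload crosses the link.
        self.data = transform(self.data)


def revise(page: bytes) -> bytes:
    """Example 'revision' of the book: the conjecture has now been proven."""
    return page.replace(b"conjecture", b"theorem")


book = b"the conjecture holds " * 1000

# Data out, modify, data back in.
host_side = ToyDrive(book)
host_side.write(revise(host_side.read()))

# Modify in place on the drive.
in_place = ToyDrive(book)
in_place.run_in_place(revise)

print("host-side bytes moved:", host_side.bytes_over_link)
print("in-place bytes moved:", in_place.bytes_over_link)
```

Both drives end up holding the same revised book; the only thing that differs in this sketch is how much data had to travel to get there.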

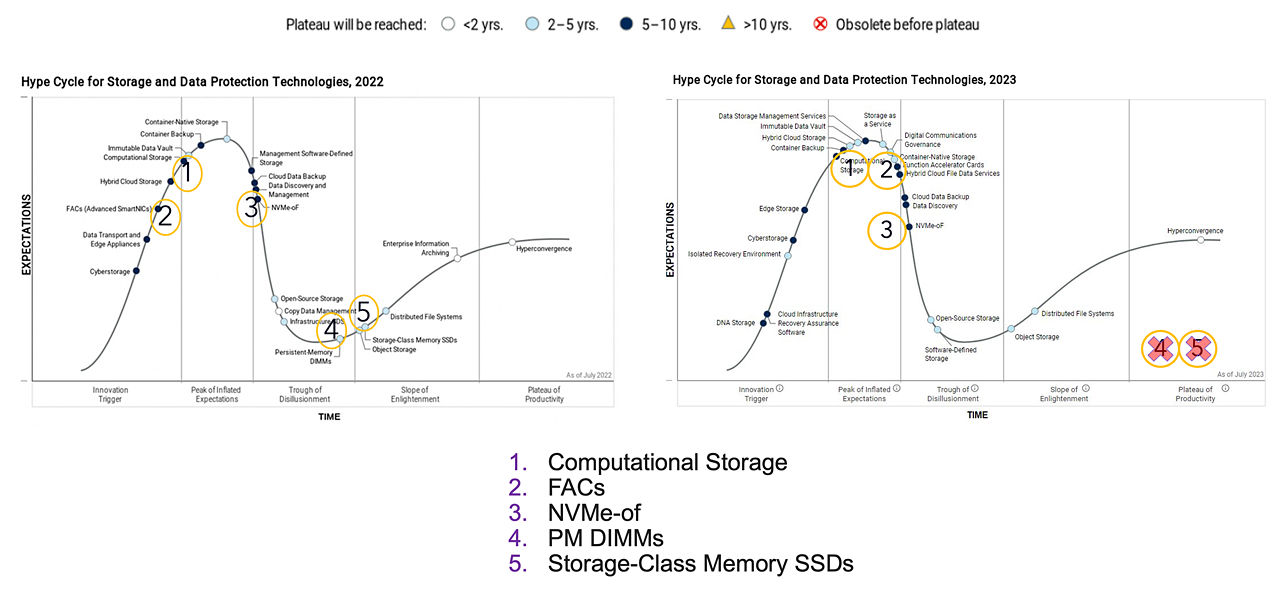

"But is that super-slow?” readers may ask. Well, for those that need it done “now”—that is where GPU, PIM, and other technologies are ideal. They all complement one another rather than compete. CXL, PIM, CSD, CIM, AIM, GPU, DPU, CPU, DRAM, HBM, you name it—they are all pieces of a puzzle that needs to be aggressively looked at for efficiencies and usefulness (rather than just accepting the status quo). You can see how these pieces work together and evolve in the ecosystem by looking at the tracking of these technologies in the Gartner Hype Cycle changes over the last two years.

Figure 3. “Official” Gartner Hype Cycle for Storage for computational storage

It has been said that the definition of insanity is to do the same thing over and over, expecting a different outcome. We need to take a hard look at the compute infrastructures we have today and overcome the insanity of just throwing more of the same at a hard problem and, instead, move to a more elegant solution to provide a better value. Yes, change is needed in many places within the system architecture to truly adapt to these innovative technologies. But we as an industry must start somewhere and drive that change.

Solidigm is driving the evolution of computational storage

Here at Solidigm, we are driving that change. We are thinking outside that simple “SSD Box” and exploring technologies that support and improve the off-the-shelf solutions of today, creating opportunity for new markets, new solutions, better value, and more effective use of the tools in the global toolbox.

If you have found this an interesting read, please check out my backup slides that I presented at Flash Memory Summit 2023 and drop me a line to discuss further.

Notes

[1] Online SNIA Dictionary | SNIA (Storage Networking Industry Association)

[2] https://www.pcworld.com/article/472983/evolution-of-the-solid-state-drive.html

About the Author:

Scott Shadley is a Director of Strategic Planning at Solidigm. He has spent over 25 years in the semiconductor and storage space in manufacturing, design, and marketing. His experience includes 17 years at Micron and time at STEC, where he was critical to the growth of the startup through to its acquisition. His efforts have helped lead to products in the market with over $300M in revenue, with over $2B in overall program revenues over the last 10 years. He recently spent time at NGD Systems driving computational storage technology. He is an avid, self-proclaimed geek who enjoys Star Trek and Star Wars lore, spending time with his kids (now both in college), and playing mobile games. Scott likes his cell phone and the related technology and has long followed the evolution of that market. His work in volunteer organizations, both work and non-work, keeps him busy.